After studying various examples of electronic music that focuses on bass, I felt it was important to become more proficient with two widely used Waves plugins: RBass and MaxxBass. Both plugins use harmonic processing to achieve different results.

First, I will look at exactly what the plugins do and how they do it.

RBass – After conducting some research into the plugin (the Waves website wasn’t much help!), I found that this video explained its function pretty well:

RBass increases the perceived bass response by using an algorithm that generates harmonics of the bass notes higher up the frequency spectrum. The ear infers the missing fundamental from these harmonics, so the bass can still be heard on playback systems with limited low-end response, and also at lower volumes.
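To make the idea of "generating harmonics" concrete, here is a minimal Python sketch. It is not Waves’ algorithm, which isn’t documented; it assumes a simple polynomial waveshaper, and the helper names and the coefficients `a2` and `a3` are hypothetical. Feeding it a 60 Hz sine and measuring single DFT bins shows new energy at 120 Hz and 180 Hz, i.e. harmonics of the original note, with nothing added at 240 Hz because the polynomial stops at the cubic term.

```python
import math

SAMPLE_RATE = 48_000  # assumed sample rate (Hz)
DURATION_S = 1.0      # one second, so every whole-Hz frequency fits an integer number of cycles


def sine(freq_hz, amplitude=1.0):
    """Generate a sine wave as a list of samples."""
    n = int(SAMPLE_RATE * DURATION_S)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def waveshape(samples, a2=0.2, a3=0.1):
    """Hypothetical harmonic generator: a polynomial nonlinearity.

    The squared term adds a component at 2f (plus a DC offset);
    the cubed term adds a component at 3f.
    """
    return [x + a2 * x * x + a3 * x ** 3 for x in samples]


def level_at(samples, freq_hz):
    """Amplitude of one frequency component, measured with a single DFT bin."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / n


def to_db(amplitude):
    """Linear amplitude to dB, floored to avoid log10(0)."""
    return 20 * math.log10(max(amplitude, 1e-12))


bass = waveshape(sine(60))
for f in (60, 120, 180, 240):
    print(f"{f:>4} Hz: {to_db(level_at(bass, f)):7.1f} dB")
```

Plotting these levels on an analyser would show the same picture as the RBass screenshot below: the original fundamental plus a short tail of harmonics above it.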

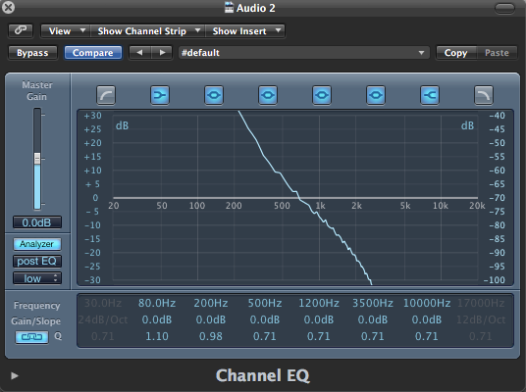

Here we have the frequency spectrum of a 60 Hz sine wave, with the analyser’s scale pulled down so that we can see the upper tail of the response.

After applying some RBass, you can see that new harmonics appear above the 60 Hz fundamental. On listening, I can hear that the bass has a slight “buzz” that gives it more presence, especially on smaller speakers.

Now for MaxxBass

After researching what MaxxBass does, I realised that the two plugins are in fact very similar. Like RBass, MaxxBass generates harmonics that trick the human ear into hearing the fundamental, even if the playback system cannot reproduce the fundamental frequency itself.
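The "trick" both plugins rely on is the psychoacoustic effect usually called the missing fundamental. The Python sketch below illustrates it under simple assumptions: it builds a tone from only the 2nd, 3rd and 4th harmonics of 60 Hz (120, 180 and 240 Hz), with no energy at 60 Hz at all, and then uses autocorrelation to find how often the waveform repeats. Autocorrelation is only a crude stand-in for how the ear judges pitch, and the helper names are hypothetical. The strongest repetition lag comes out at 800 samples, i.e. 60 Hz, even though the 60 Hz bin is empty.

```python
import math

SAMPLE_RATE = 48_000  # assumed sample rate (Hz)
N = SAMPLE_RATE       # one second of audio


def harmonics_only(fundamental_hz, harmonic_numbers):
    """Equal-amplitude sines at multiples of the fundamental, leaving the fundamental out."""
    return [
        sum(math.sin(2 * math.pi * k * fundamental_hz * i / SAMPLE_RATE) for k in harmonic_numbers)
        for i in range(N)
    ]


def level_at(samples, freq_hz):
    """Amplitude of one frequency component, measured with a single DFT bin."""
    re = sum(s * math.cos(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / len(samples)


def strongest_period(samples, min_lag, max_lag, window=2400):
    """Lag (in samples) with the highest autocorrelation: a rough estimate of the repetition period."""
    ref = samples[:window]
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(a * b for a, b in zip(ref, samples[lag:lag + window]))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


signal = harmonics_only(60, (2, 3, 4))
print("energy at 60 Hz:", level_at(signal, 60))  # effectively zero: the fundamental is absent
lag = strongest_period(signal, 300, 1000)
print(f"waveform repeats every {lag} samples = {SAMPLE_RATE / lag:.1f} Hz")
```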

While their function is very similar, MaxxBass gives you far greater control over the effect, with separate levels for the input signal, the original bass, and the MaxxBass (generated harmonics) signal. There are also controls for the crossover frequency and dynamics, as well as a range of presets. This makes MaxxBass (in theory) superior to RBass.
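The controls described here suggest a signal flow along these lines: split off the lows at a crossover, generate harmonics from the lows, then mix the highs, the original lows and the harmonics at independent levels. The Python sketch below is a hypothetical illustration of that flow, not Waves’ implementation; the parameter names (`input_db`, `crossover_hz`, `original_bass_db`, `harmonics_db`) are invented for the example, and the one-pole filter and full-wave rectifier are deliberately crude choices (a one-pole split leaves plenty of 60 Hz in the "highs", which is one reason real crossovers are steeper).

```python
import math

SAMPLE_RATE = 48_000  # assumed sample rate (Hz)


def db_to_gain(db):
    """Convert a level in dB to a linear gain factor."""
    return 10 ** (db / 20)


def one_pole_lowpass(samples, cutoff_hz):
    """First-order low-pass filter, used here as a deliberately crude crossover."""
    alpha = 1 - math.exp(-2 * math.pi * cutoff_hz / SAMPLE_RATE)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def bass_enhancer(samples, input_db=0.0, crossover_hz=100.0,
                  original_bass_db=0.0, harmonics_db=-6.0):
    """Hypothetical MaxxBass-style signal flow; parameter names are illustrative only."""
    # 1. Input level.
    x = [s * db_to_gain(input_db) for s in samples]
    # 2. Crossover: lows from the filter, highs as the remainder, so lows + highs == x.
    lows = one_pole_lowpass(x, crossover_hz)
    highs = [a - b for a, b in zip(x, lows)]
    # 3. Harmonic generator: full-wave rectification creates even harmonics (2f, 4f, ...).
    #    Subtracting the overall mean removes the DC offset it introduces (a simplification).
    rectified = [abs(s) for s in lows]
    mean = sum(rectified) / len(rectified)
    harmonics = [r - mean for r in rectified]
    # 4. Mix: highs as split off, original lows and generated harmonics at independent levels.
    g_orig, g_harm = db_to_gain(original_bass_db), db_to_gain(harmonics_db)
    return [h + g_orig * lo + g_harm * hm for h, lo, hm in zip(highs, lows, harmonics)]


# Usage: a 60 Hz sine with the original lows turned right down, leaving highs + harmonics.
tone = [math.sin(2 * math.pi * 60 * i / SAMPLE_RATE) for i in range(SAMPLE_RATE)]
out = bass_enhancer(tone, original_bass_db=-120.0, harmonics_db=0.0)
```

Keeping the original-bass and harmonics levels separate is what lets you trade low-end weight against small-speaker audibility, rather than getting a fixed blend.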

As you can see above, MaxxBass (at the same output level in dB as the RBass example) generates more harmonics, which extend further up the frequency spectrum. This makes the bass more audible on smaller speakers.

However, on A/B’ing the two examples, I found that while MaxxBass gave greater high-end extension and transferability, RBass added a weight to the low end that was very appealing to listen to.

This difference is subjective, based on my own listening, but the conclusion I can draw from this comparison is that MaxxBass is good for making bass transferable across small speaker systems, whereas RBass is good at adding weight to low-end instruments.

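Both are good alternatives to EQ’ing the bass, which can often result in the bass eating up too much headroom in the mix, since boosting the low frequencies directly raises the peak level of the most energetic part of the signal.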
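A quick way to see the headroom point is to compare peak levels. The Python sketch below assumes a bass note peaking at -6 dBFS and compares a +6 dB boost of the fundamental (what a narrow EQ boost does to a pure tone) with adding 2nd and 3rd harmonics 12 dB below the fundamental; all of these amounts are hypothetical. The boost uses up the full 6 dB and reaches 0 dBFS, while the harmonics raise the peak by only a little over 1 dB. Note that it measures peak level only, not how loud or heavy each version sounds, which is what the listening comparison above addresses.

```python
import math

SAMPLE_RATE = 48_000  # assumed sample rate (Hz)
FUNDAMENTAL_HZ = 60.0
N = SAMPLE_RATE // 10  # 0.1 s: six whole cycles of 60 Hz


def db_to_gain(db):
    """Convert a level in dB to a linear gain factor."""
    return 10 ** (db / 20)


def peak_dbfs(samples):
    """Highest absolute sample value, in dB relative to full scale (1.0)."""
    return 20 * math.log10(max(abs(s) for s in samples))


def tone(partials):
    """Sum of sines given as (harmonic number, amplitude) pairs."""
    return [
        sum(a * math.sin(2 * math.pi * k * FUNDAMENTAL_HZ * i / SAMPLE_RATE) for k, a in partials)
        for i in range(N)
    ]


base_amp = db_to_gain(-6.0)  # assumed starting level: bass note peaking at -6 dBFS

# Option A: boost the fundamental itself by 6 dB.
eq_boosted = tone([(1, base_amp * db_to_gain(6.0))])

# Option B: keep the fundamental and add 2nd and 3rd harmonics 12 dB below it.
harm_amp = base_amp * db_to_gain(-12.0)
enhanced = tone([(1, base_amp), (2, harm_amp), (3, harm_amp)])

print(f"original : {peak_dbfs(tone([(1, base_amp)])):6.2f} dBFS")
print(f"EQ +6 dB : {peak_dbfs(eq_boosted):6.2f} dBFS")
print(f"harmonics: {peak_dbfs(enhanced):6.2f} dBFS")
```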

I plan to use the two plugins where appropriate in my own work, guided by these findings.